When Akamai noticed a spike in traffic to a web page, the company could easily have thought: another day, another DDoS attack.

A DDoS attack is a common, accessible attack method that uses excessive traffic to disrupt a website's operations. The traffic is often produced by botnets of compromised devices, ranging from PCs to Internet of Things (IoT) devices, routers, and smartphones, that all visit a website simultaneously.

These sudden, massive bursts of visits make a website's traffic spike sharply, which can overload its systems and prevent legitimate users from connecting.

One of the largest DDoS attacks on record was the attack on GitHub last year, which peaked at 1.3 Tbps.

In this case, which occurred in early 2018, the traffic spike was detected on a website belonging to an Akamai client in Asia, according to a case study the company is due to publish on Wednesday.

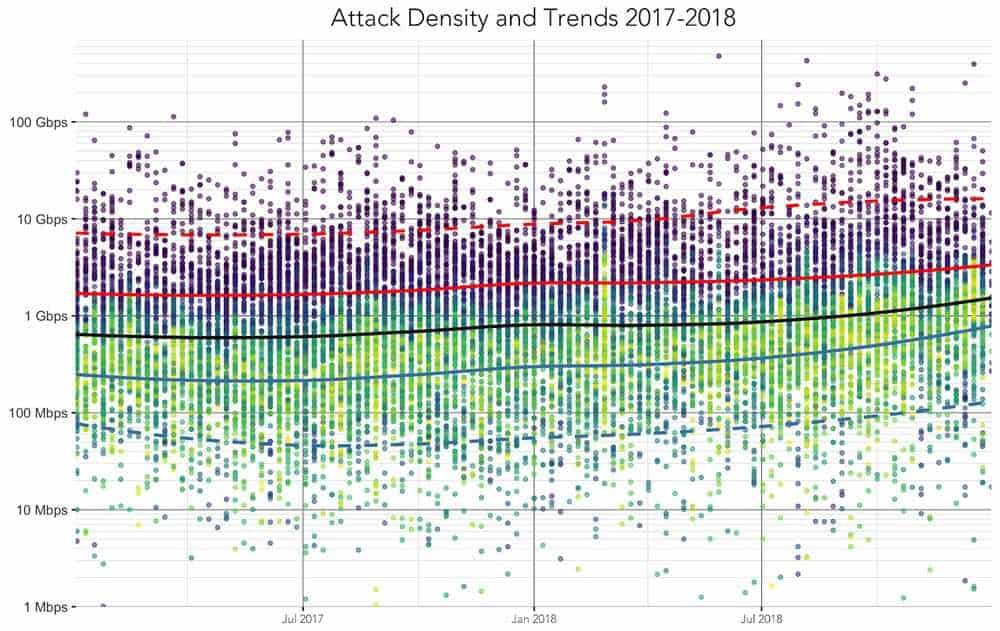

The initial spike in traffic (over four billion requests) was so large that it came close to crashing the logging systems. At its peak, the site received 875,000 requests per second, with traffic volumes reaching 5.5 Gbps.
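To put these figures in perspective, a rough calculation helps. Akamai says the four billion requests arrived in less than a week, so spreading them evenly over a full seven days gives a floor on the sustained request rate. The sketch below assumes exactly four billion requests and exactly one week, both of which are simplifications.

```python
# Rough arithmetic only: the totals are rounded figures from the article,
# and the one-week window is an assumed upper bound on the duration.
SECONDS_PER_WEEK = 7 * 24 * 60 * 60

total_requests = 4_000_000_000   # "over four billion requests"
reported_rate = 875_000          # requests per second quoted in the article

# Rate if the requests were spread evenly across the whole week.
even_rate = total_requests / SECONDS_PER_WEEK

# How many times larger the quoted rate is than the even-spread rate.
ratio = reported_rate / even_rate

print(f"Even-spread rate: {even_rate:,.0f} requests/second")
print(f"Quoted rate is about {ratio:.0f}x the even-spread rate")
```

Under these assumptions the even-spread rate is only a few thousand requests per second, far below the 875,000 figure, which suggests the traffic arrived in concentrated bursts rather than at a steady pace.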

Such a huge volume of traffic with no apparent cause would make anyone think of a typical DDoS attack.

However, the company on the receiving end of the "attack" learned a valuable lesson: buggy code can be just as disruptive as an external cyber attack.

The incident was reported to Akamai's Security Operations Command Center (SOCC), which, with the help of SIRT investigators, began examining the traffic flows from the days leading up to the "attack".

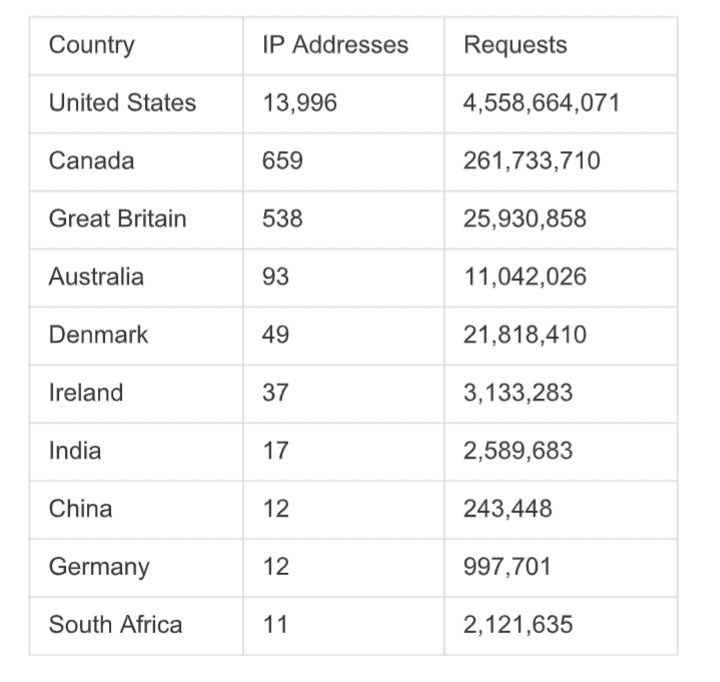

"There were 139 IP addresses that approached the client's URL a few days before the 'attack,'" Akamai said. That URL received 643 requests, over four billion requests, in less than a week. ”

About half of the IP addresses were marked as network address translation (NAT) gateways, and this traffic was later discovered to be generated by a Microsoft Windows COM Object, WinhttpRequest.

The normal traffic received by the domain before the event contained both GET and POST requests. The suspicious traffic, however, consisted only of POST requests.

"Examining all the POST requests that hit our client's URL, we found that the User-Agent fields were not spoofed or altered in any way," Akamai said, which reinforced the researchers' view that the requests originated from Windows itself.

The SOCC was able to mitigate most of the anomalous requests over the next 28 hours, discovering that the traffic was "the result of an internal tool."
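The kind of triage described above, separating sources by request method and checking whether their User-Agent values stay consistent, can be sketched in a few lines. This is only an illustration, not Akamai's tooling: the `(ip, method, user_agent)` record shape, the function names, and the sample values (including the `ExampleTool/1.0` agent string) are all assumptions made for the example.

```python
from collections import Counter, defaultdict


def summarize_requests(records):
    """Tally request methods and User-Agent strings per source IP.

    `records` is an iterable of (ip, method, user_agent) tuples. This shape
    is a hypothetical stand-in for a real access-log format.
    """
    methods = defaultdict(Counter)
    agents = defaultdict(Counter)
    for ip, method, user_agent in records:
        methods[ip][method.upper()] += 1
        agents[ip][user_agent] += 1
    return methods, agents


def post_only_sources(methods):
    """Return, in sorted order, the IPs that sent nothing but POST requests."""
    return sorted(ip for ip, counts in methods.items() if set(counts) == {"POST"})


# Made-up sample data using documentation-reserved IP ranges.
sample = [
    ("203.0.113.5", "GET", "Mozilla/5.0"),
    ("203.0.113.5", "POST", "Mozilla/5.0"),
    ("198.51.100.7", "POST", "ExampleTool/1.0"),
    ("198.51.100.7", "post", "ExampleTool/1.0"),
]

methods, agents = summarize_requests(sample)
suspects = post_only_sources(methods)
print(suspects)
for ip in suspects:
    # A single, stable User-Agent per source hints at one automated client.
    print(ip, dict(agents[ip]))
```

A source that sends only POST requests with one unchanging User-Agent looks more like a single automated client than a crowd of browsers, which is the pattern the investigators describe.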

The problem was a bug, not a botnet. Bugs in a warranty tool caused it to send POST requests to the domain automatically and at a very high frequency, which overwhelmed the site.

It is important to note that not all bots are malicious, and that many are used for legitimate purposes such as warranty systems, search engines, archiving, and content aggregation.
