When trying to hack a website, it can be extremely useful to enumerate the URL parameters of its various pages. The URLs that carry these parameters may point to many file types, including php, woff, css, js, png, svg, jpg and more. A given parameter can hint at a specific vulnerability, such as SQLi, XSS, LFI, and others. Once we have discovered the parameters, we check each of them for vulnerabilities.

This can be especially useful in bug bounty.

There are various tools we can use to scan a website, such as OWASP ZAP or WebScarab, but these tools can be very noisy and offer no stealth.

Any experienced security technician will notice the traffic and the rapid requests. One way to avoid this detection is to scan the copy of the website archived at archive.org (as you know, archive.org maintains a repository of past versions of websites). Of course, the archived files probably won't be identical to the live site, but they likely have enough in common with it to keep false positives low, while at the same time not alerting the site owner.
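To make the idea concrete, here is a minimal Python sketch of how archived URLs can be requested from archive.org instead of from the target. It assumes the public Wayback Machine CDX endpoint and its `url`, `output`, `fl` and `collapse` query fields; the function names and the example.com domain are placeholders of our own, not part of any tool, and the script only builds the query without sending it.

```python
from urllib.parse import urlencode, urlsplit

# Public Wayback Machine CDX endpoint (assumed here); queries go to
# archive.org, never to the target site itself.
CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def build_cdx_query(domain: str) -> str:
    """Return a CDX URL that lists archived URLs under a domain."""
    params = {
        "url": f"*.{domain}/*",   # the domain and its subdomains
        "output": "txt",          # one result per line
        "fl": "original",         # return only the originally captured URL
        "collapse": "urlkey",     # collapse repeated captures of the same URL
    }
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def has_parameters(url: str) -> bool:
    """True when the URL carries a query string such as ?id=1."""
    return bool(urlsplit(url).query)


if __name__ == "__main__":
    # example.com is a placeholder; no request is sent by this script.
    print(build_cdx_query("example.com"))
```

Fetching that URL would return one archived URL per line, and `has_parameters` could then keep only the lines worth testing.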

There is a great tool for finding these parameters in archived copies of sites called ParamSpider.

Step #1: Download and Install ParamSpider

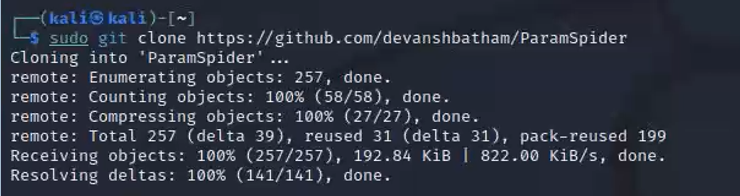

Our first step is to download and then install ParamSpider. We can use git clone to copy the repository to our system.

kali > sudo git clone https://github.com/devanshbatham/ParamSpider

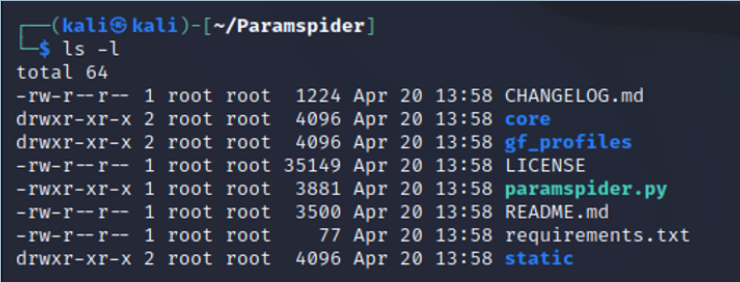

Now, navigate to the new directory ParamSpider and list the contents of the directory.

kali > cd ParamSpider

kali > ls -l

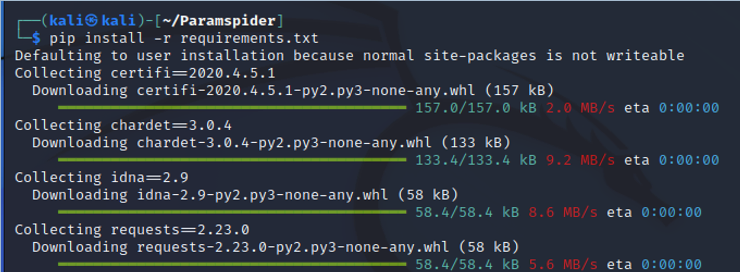

Note the file requirements.txt. We can use this file to install all of the tool's requirements with the pip command, as shown below.

kali > pip install -r requirements.txt

Now, we are ready to run ParamSpider!

Step #2: Launch ParamSpider

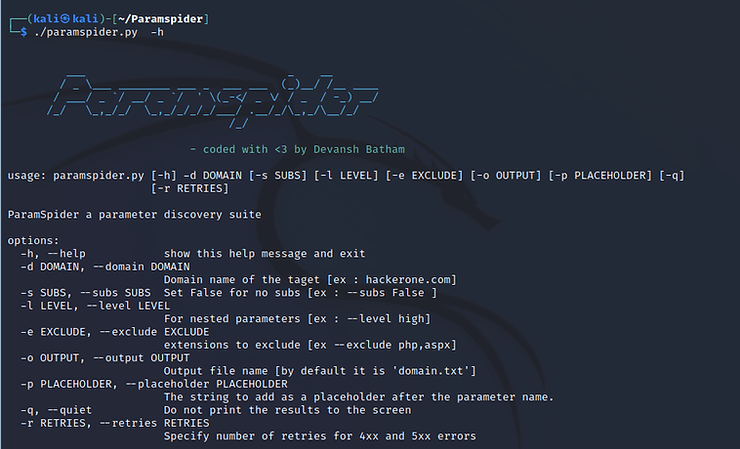

Now that we have ParamSpider installed, let's check out the help screen.

In its simplest form, the ParamSpider syntax is simply the command plus -d (domain) followed by the name of the domain. For example, if we want to scan Elon Musk's tesla.com, we simply type,

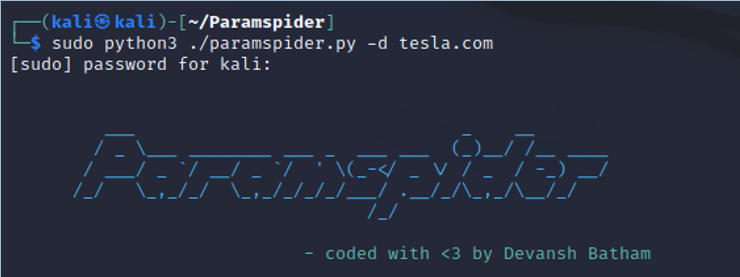

kali > sudo python3 ./paramspider.py -d tesla.com

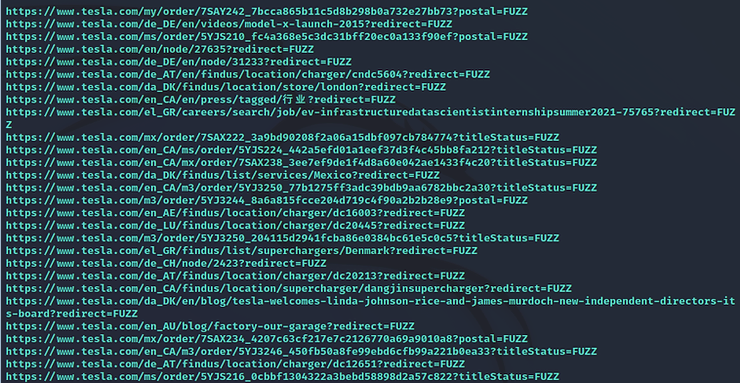

When you press enter, you'll see ParamSpider crawl the archived copy of the tesla.com website at archive.org, looking for various parameters in the URLs.

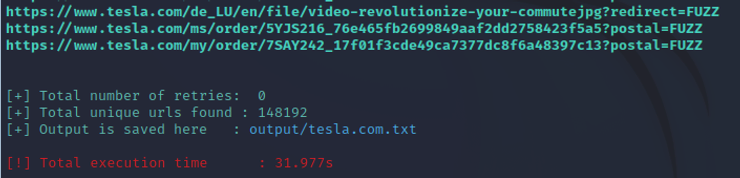

As you can see above, ParamSpider found 148,192 unique tesla.com URLs, and because the requests went to archive.org rather than to tesla.com, Elon Musk doesn't even know we scanned them!

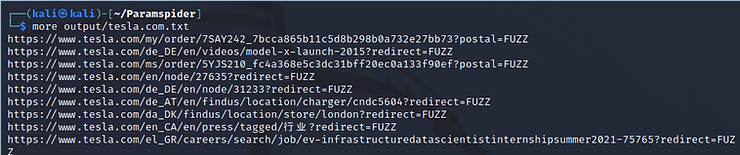

To see the results, we can simply use the command more followed by the name of the output file (by default, the output file is located in the output directory with a file name of the domain with the extension .txt). In this case, we can simply type:

kali > more output/tesla.com.txt

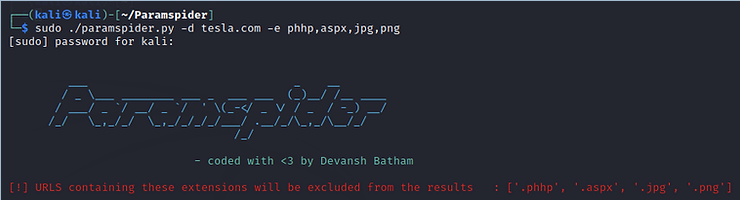
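With this many URLs, paging through the file with more only goes so far. As a rough sketch, the Python below counts how often each parameter name appears, which can help decide what to test first. The function name and the sample URLs are hypothetical stand-ins; in practice you would read the lines from the output file instead.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit


def count_parameter_names(urls):
    """Count how often each query-parameter name appears across the URLs."""
    counts = Counter()
    for url in urls:
        query = urlsplit(url.strip()).query
        # keep_blank_values keeps parameters such as "?q=" that have no value
        for name, _value in parse_qsl(query, keep_blank_values=True):
            counts[name] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical sample lines standing in for output/tesla.com.txt
    sample = [
        "https://example.com/search?q=FUZZ",
        "https://example.com/item.php?id=FUZZ&lang=FUZZ",
        "https://example.com/view?id=FUZZ",
    ]
    for name, hits in count_parameter_names(sample).most_common():
        print(name, hits)
```

To run it against real results, replace the sample list with the lines of the file, for example `open("output/tesla.com.txt").readlines()`.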

In some cases, we may not want to see ALL the URLs. For example, we may not want to see those ending in php, jpg, png, aspx, etc. Excluding them narrows your focus to fewer parameters to test. We exclude these extensions using the switch -e followed by a comma-separated list with no spaces, such as

kali > sudo python3 ./paramspider.py -d tesla.com -e php,aspx,jpg,png
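The same kind of filtering can also be applied after the fact to a list of URLs you have already collected. The sketch below illustrates the idea only and is not ParamSpider's own implementation; the function name is hypothetical.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit


def exclude_extensions(urls, excluded):
    """Drop URLs whose path ends in one of the excluded extensions."""
    # Normalize to lowercase and strip any leading dot: ".PHP" -> "php"
    blocked = {ext.lower().lstrip(".") for ext in excluded}
    kept = []
    for url in urls:
        # Look only at the path, so "?v=2" style queries do not hide the extension
        suffix = PurePosixPath(urlsplit(url).path).suffix  # e.g. ".php" or ""
        if suffix.lower().lstrip(".") not in blocked:
            kept.append(url)
    return kept
```

Calling `exclude_extensions(urls, ["php", "aspx", "jpg", "png"])` returns only the URLs whose paths do not end in those extensions, in their original order.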

Summary

When hacking web applications, it can be very beneficial to find parameters that are often vulnerable to a particular type of attack.

With a tool like ParamSpider, we can enumerate and log these URLs and then use them for testing at a later time without alarming the site owners.

While far from perfect, it can give us some insight into potential vulnerabilities as well as vulnerabilities that have been mitigated (if a certain type of vulnerability existed in one part of the site, it's very likely that other similar vulnerabilities exist). ParamSpider is another valuable tool in the hacker's toolbox.