Every day that passes brings us closer to the virtual reality that Facebook is serving up as the metaverse.

The bad news is that this is a virtual world in which a single company is starting to emerge as a monopoly.

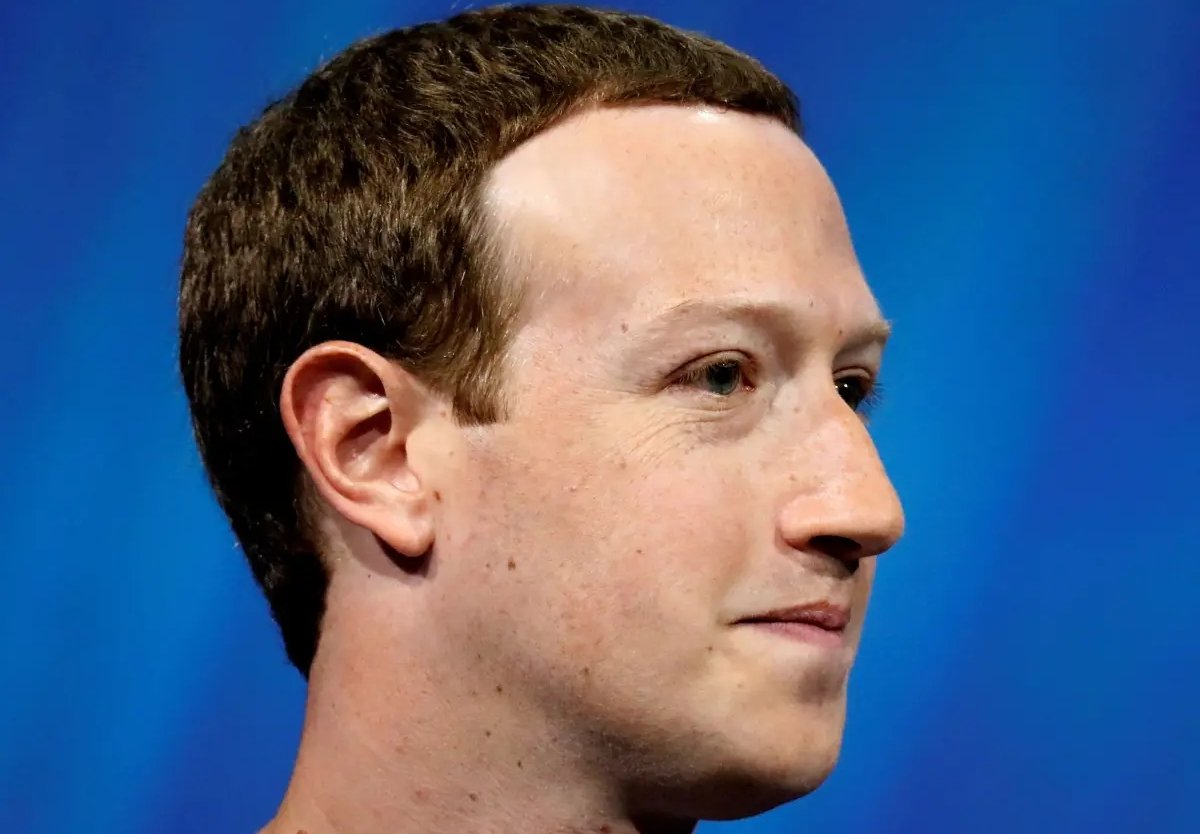

The company continues to grow according to the vision of Meta CEO Mark Zuckerberg, a vision that apparently includes a "robot skin".

In an announcement published on Monday, the artificial intelligence division of Meta (the company formerly known as Facebook) said it is working with Carnegie Mellon University in Pennsylvania to develop a lightweight, skin-like robotic touch sensor that will help researchers give artificial intelligence a sense of touch as they build the metaverse.

"When you wear a Meta device, you will want it to provide some haptics as well, so that users can feel with more of their senses," Meta researcher Abhinav Gupta said in an interview, according to CNBC.

Called ReSkin, the new open-source "skin" is aimed at artificial intelligence researchers who want to "exploit the richness of the sense of touch the way humans do" by incorporating touch into their models.

According to Meta, the skin will use machine learning and magnetic sensing to calibrate its own sensor automatically. In other words, this is a "skin" that can share data between systems, essentially eliminating the need to retrain a new skin every time it is used in a new system.

Meta also says that ReSkin will transmit data magnetically rather than electronically, combining data from multiple sensors with self-supervised learning models so that the sensors can calibrate themselves.

"Robust tactile sensing is a major hurdle in robotics," said Lerrel Pinto, an assistant professor of computer science at NYU. "Current sensors are either too expensive, offer poor resolution, or are too cumbersome to fit onto custom robots."

"ReSkin has the ability to overcome many of these problems."

ReSkin, which will be about 2-3 mm thick, can withstand more than 50,000 interactions. An added bonus is that it is relatively inexpensive to produce (about $6 per unit when made in batches of 100).

ReSkin's tactile capabilities make it particularly well suited to tasks that would otherwise require a human hand, such as "using a key to unlock a door or grasping delicate objects such as grapes or blackberries".

I don't know how all of the above sounds to you, but personally I am very concerned that Zuckerberg's skin will be based on "self-supervised learning models" whose first training data will come from a company like Facebook.