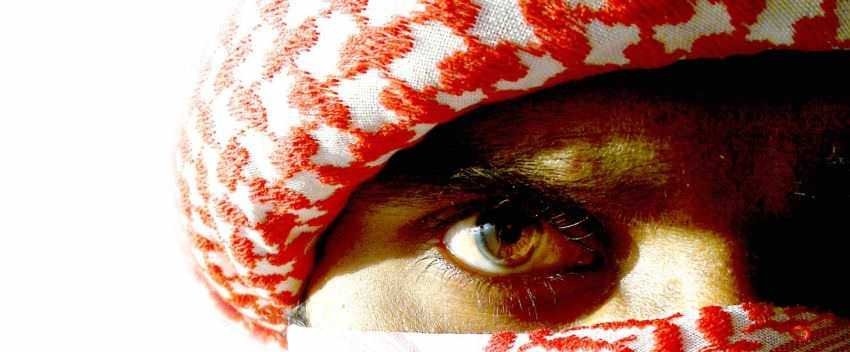

Following intense criticism from both EU leaders and its own community, Facebook is preparing to use artificial intelligence to combat terrorist communications and, more broadly, to curb propaganda on its platform.

The AI technology will be applied not only to identify Islamist extremist groups such as ISIS, but also to any group that promotes violent behavior or engages in acts of violence. A broader definition of terrorism could include violent gang activity, drug trafficking, or white nationalists who support violence.

Facebook is currently testing the use of natural language processing and analysis, adding features that identify violent posts based on the words used by accounts that have already been suspended.

"Today we are experimenting with analyzing text that we have already removed for supporting terrorist organizations such as ISIS or Al Qaeda. We're trying to identify such text using an algorithm that is still in its infancy," wrote Monika Bickert, director of global policy management, and Brian Fishman, director of counterterrorism policy, in a Facebook blog post.

The social network highlighted a number of initiatives it has already taken to control extremist content, such as using technology to recognize terrorist-related photos and videos, deleting fake accounts, and seeking advice from a panel of counterterrorism experts.

Earlier this month, following the terrorist attack in London that killed seven people, British Prime Minister Theresa May called on nations to reach international agreements to "regulate cyberspace, to prevent the spread of extremist and terrorist planning". Urging Facebook to take immediate action, May partly blamed the social network for the attack, arguing that its platforms give terrorists a "safe space" that helps them operate.

In March, a German minister said companies such as Facebook and Google could face fines of up to $53 million if they fail to remove hate speech.

Shortly after the events in May, Facebook's policy manager, Simon Milner, said the company would make its social network a "hostile environment" for terrorists.

Last month, Mark Zuckerberg said his company plans to hire 3,000 additional people for Facebook's Community Operations team, on top of the 4,500 it currently employs. The team reviews content that is potentially inappropriate or violates Facebook's policies.

In its latest blog post, Facebook asks for help from anyone who thinks they can contribute. If you have ideas on how to stop the spread of terrorism online, you can write to hardquestions@fb.com.